In this article, we compare the performance of webservers commonly used to implement server-side applications (as opposed to webservers used to serve static content or to act as proxies). We look at webservers implemented in Erlang, Go, Java (OpenJDK), and JavaScript (NodeJS).

We test the following webservers:

- Cowboy 1.1.2 with Erlang OTP 22.2

- Cowboy 2.7 with Erlang OTP 22.2

- Mochiweb 2.20.0 with Erlang OTP 22.2

- Go 1.13.5 net/http

- FastHTTP with Go 1.13.5

- NodeJS 13.3.0

- Clustered NodeJS 13.3.0

- Netty 4.1.43 with OpenJDK 13.0.1

- Rapidoid 5.5.5 with OpenJDK 13.0.1

Methodology

To simulate generic web application behavior, we devised the following synthetic workload.

Each client device opens a connection and sends 100 requests, waiting 900 milliseconds ±5% between consecutive requests. The server handles each request by sleeping for 100 milliseconds ±5%, to simulate an interaction with a backend database, and then returns 1 kB of payload.

Without additional delays, this results in an average connection lifetime of 100 seconds and a per-device load averaging 1 request per second and 0.01 connections per second. This workload is expressed by the combination of the following Stressgrid script and the “dummy” web applications created for each webserver.

1..100 |> Enum.each(fn _ ->

get("/")

delay(900, 0.05)

end)
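The dummy applications themselves are not listed in this article. As a rough illustration only, a Go `net/http` handler with the behavior described above might look like the following sketch. The names `payload`, `jitter`, and `handle` are hypothetical, the 1,024-byte filler body is an assumption about what "1 kB" means, and `main` exercises the handler once in-process through `net/http/httptest` instead of listening on a port.

```go
package main

import (
	"bytes"
	"fmt"
	"math/rand"
	"net/http"
	"net/http/httptest"
	"time"
)

// payload is the response body. ASSUMPTION: 1,024 bytes of arbitrary filler.
var payload = bytes.Repeat([]byte("x"), 1024)

// jitter scales base by a random factor in [1-spread, 1+spread).
func jitter(base time.Duration, spread float64) time.Duration {
	factor := 1 + spread*(2*rand.Float64()-1)
	return time.Duration(float64(base) * factor)
}

// handle sleeps for 100 ms ±5% to stand in for a backend database call,
// then writes the fixed payload.
func handle(w http.ResponseWriter, r *http.Request) {
	time.Sleep(jitter(100*time.Millisecond, 0.05))
	w.Header().Set("Content-Type", "application/octet-stream")
	w.Write(payload)
}

func main() {
	// Call the handler once without opening a socket. A real server would
	// register it with http.HandleFunc and call http.ListenAndServe.
	rec := httptest.NewRecorder()
	req := httptest.NewRequest(http.MethodGet, "/", nil)
	start := time.Now()
	handle(rec, req)
	fmt.Printf("status=%d bytes=%d elapsed=%v\n",
		rec.Code, rec.Body.Len(), time.Since(start).Round(time.Millisecond))
}
```

Because the handler blocks only in `time.Sleep`, the Go runtime parks the goroutine for the duration of the simulated backend call, so the sleep itself costs almost no CPU.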

We test against a medium-sized m5.2xlarge instance with 8 vCPUs and 32 GiB of RAM. The test is structured as a continuous 1-hour ramp-up from 0 to 300k devices. We chose 300k based on the packet-per-second limit of 1.25M that m5.2xlarge exhibited in our previous test.

The following calculation accounts for the network packets generated by each HTTP request and response (a transaction) and by establishing and closing each HTTP connection:

300k trans/sec * 4 packets/trans + 3k conn/sec * 6 packets/conn = 1.218M packets/sec
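The same arithmetic can be written as a small Go sketch that derives the transaction, connection, and packet rates from the number of simulated devices. The function name `load` and the constant names are hypothetical. The per-transaction and per-connection packet counts are the ones used in the calculation above, and the one-second request cycle is the 900 ms client delay plus the 100 ms server sleep.

```go
package main

import "fmt"

const (
	requestsPerConn   = 100 // requests sent over each connection
	secondsPerRequest = 1.0 // 900 ms client delay + 100 ms server sleep
	packetsPerTrans   = 4   // packets per HTTP request/response pair
	packetsPerConn    = 6   // packets to establish and close a connection
)

// load returns the steady-state transactions/sec, connections/sec, and
// packets/sec generated by the given number of simulated devices.
func load(devices float64) (trans, conns, packets float64) {
	trans = devices / secondsPerRequest
	conns = trans / requestsPerConn
	packets = trans*packetsPerTrans + conns*packetsPerConn
	return trans, conns, packets
}

func main() {
	trans, conns, packets := load(300000)
	fmt.Printf("%.0f trans/sec, %.0f conn/sec, %.0f packets/sec\n",
		trans, conns, packets)
	// prints "300000 trans/sec, 3000 conn/sec, 1218000 packets/sec"
}
```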

By placing the target number of simulated devices at the “hardware” limit, we want to show how the software-imposed limit compares to it.

We use Ubuntu 18.04.3 with the 4.15.0-1054-aws kernel and the following sysctl overrides.

fs.file-max = 1000000

net.core.somaxconn = 1024

The workload uses unencrypted HTTP and is produced by 40 c5.xlarge Stressgrid generators placed in the same VPC as the target host.

Results

In all tests, with the notable exception of non-clustered NodeJS, the limiting factor was the CPU being fully saturated. In essence, the test has shown that all webservers were scaling to all available CPUs with varying degrees of efficiency. Let’s look at the response-per-second graph.

Go’s FastHTTP came out on top, peaking at nearly 210k responses per second. Java-based Netty was a not-so-distant second with almost 170k. Go’s built-in webserver peaked slightly above 120k, clustered NodeJS at 90k, and Erlang-based Cowboy 1.x at 80k. In the 50-60k range, we have another Erlang-based webserver, Mochiweb, followed by Cowboy 2.x and Java-based Rapidoid. Finally, non-clustered NodeJS scored 25k.

Clustered NodeJS and Rapidoid both crashed by running out of RAM once overloaded. The other servers, with the exception of Mochiweb, maintained their peak performance when overloaded.

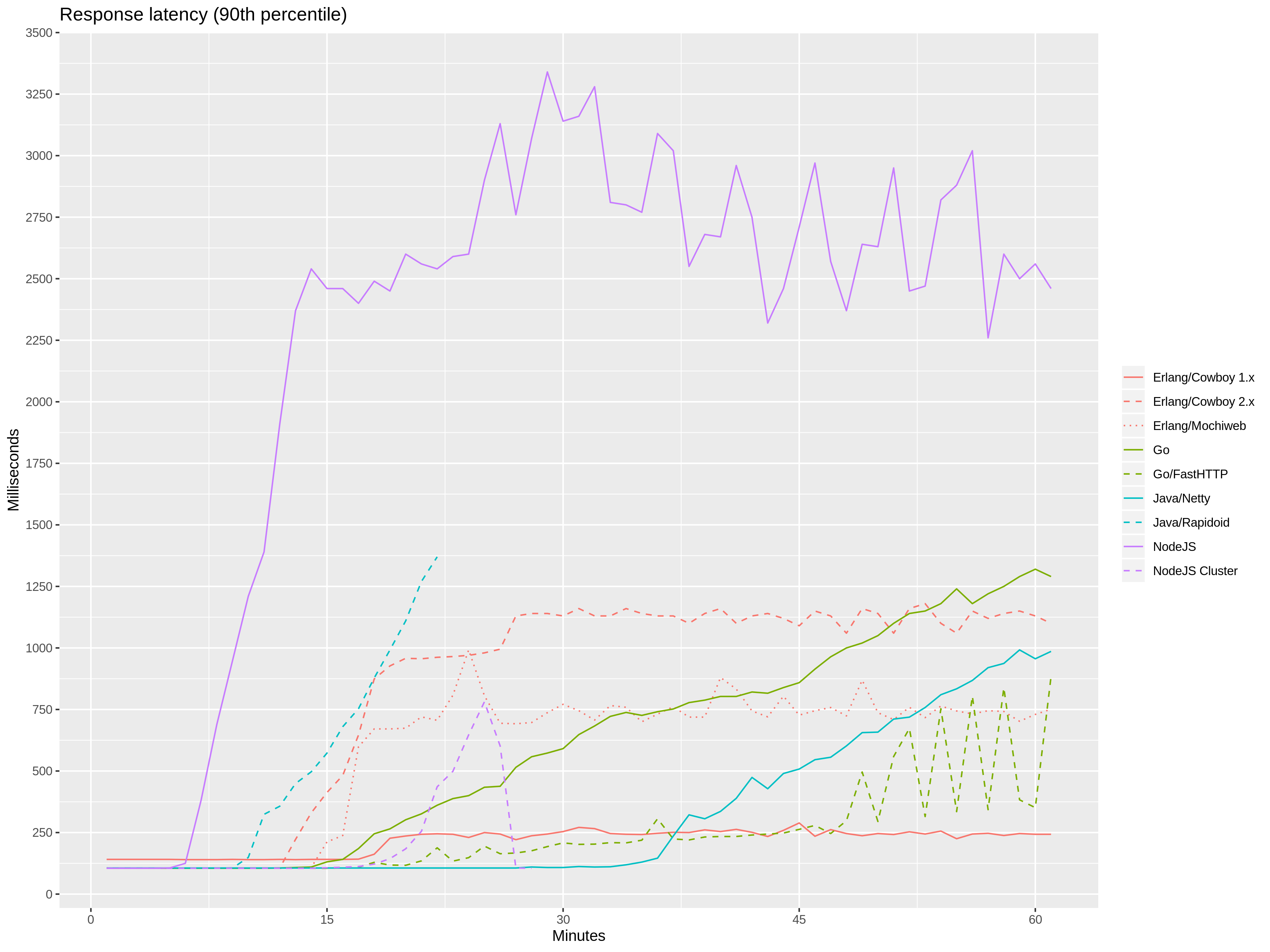

Let’s look at the 90th percentile response latency graph.

On this graph, it is much easier to see how different webservers respond to overload. Notably, all Erlang-based servers, once overloaded, maintained stable response latency, with Cowboy 1.x keeping it around 250 milliseconds! Go and Java servers were getting progressively slower. Non-clustered NodeJS, limited to a single CPU, was the slowest. The results for clustered NodeJS and Rapidoid were inconclusive since they ran out of available RAM.

The Stressgrid get function has a default timeout of 5 seconds. This means that the response latency graph only includes responses that were received in under 5 seconds. Let’s look at the rate of timeouts to get a complete picture of what happens when webservers are overloaded.

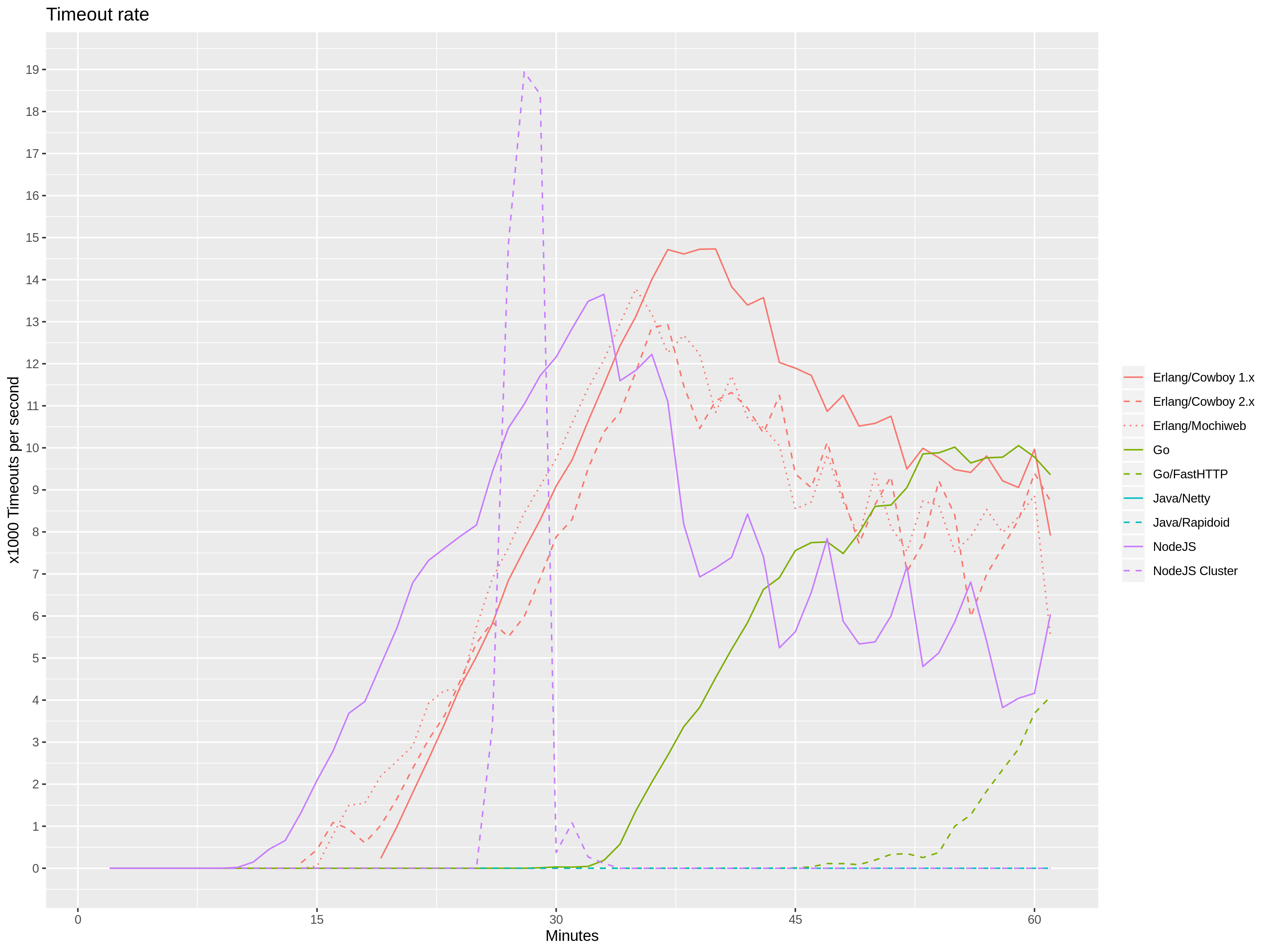

Most notably, Java’s Netty is absent from this graph. This means that Netty maintains roughly equal latency for all requests when overloaded! Erlang servers take a very different approach: they serve a fraction of requests as quickly as possible and let the rest time out. Interestingly, this behavior improves over time, with fewer and fewer timeouts. We observe similar behavior for non-clustered NodeJS. Go servers, once overloaded, start timing out as well. Since this happens at the end of the test, we can’t conclude how this behavior changes over time.
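To make the relationship between the latency and timeout graphs concrete, here is a minimal Go sketch of how the two metrics divide the same set of requests. It is not Stressgrid's implementation: the function `summarize`, the nearest-rank percentile method, and the sample values are all assumptions made for illustration. Only the 5-second cutoff comes from the text above.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// timeoutMs mirrors the 5-second default timeout of the Stressgrid get function.
const timeoutMs = 5000

// summarize takes the time each request would need, in milliseconds.
// Requests at or above the timeout count toward the timeout rate and are
// excluded from the 90th percentile, which covers completed responses only.
func summarize(latenciesMs []int) (p90 int, timeoutRate float64) {
	if len(latenciesMs) == 0 {
		return 0, 0
	}
	var completed []int
	for _, l := range latenciesMs {
		if l < timeoutMs {
			completed = append(completed, l)
		}
	}
	timeoutRate = float64(len(latenciesMs)-len(completed)) / float64(len(latenciesMs))
	if len(completed) == 0 {
		return 0, timeoutRate
	}
	sort.Ints(completed)
	// ASSUMPTION: nearest-rank percentile, i.e. the smallest sample with at
	// least 90% of the completed samples at or below it.
	rank := int(math.Ceil(0.9 * float64(len(completed))))
	return completed[rank-1], timeoutRate
}

func main() {
	// Made-up samples: 8 fast responses and 2 requests that exceed the timeout.
	samples := []int{250, 250, 250, 250, 250, 250, 250, 250, 6000, 6000}
	p90, rate := summarize(samples)
	fmt.Printf("p90=%d ms, timeouts=%.0f%%\n", p90, rate*100)
}
```

With these invented samples the 90th percentile stays at 250 ms even though a fifth of the requests time out, which is why the latency graph alone cannot distinguish a server that slows down uniformly from one that serves some requests quickly and drops the rest.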

Conclusions

This test has shown that modern webservers scale well by utilizing all CPUs on a multi-CPU machine. Performance is limited by the efficiency of the webserver implementation and the corresponding language runtime or virtual machine.

Go- and Java-based webservers proved to be the most efficient. Clustered NodeJS is reasonably efficient but runs out of RAM once overloaded. Erlang webservers were the least efficient but very stable once overloaded. Surprisingly, Cowboy 1.x performed significantly better than Cowboy 2.x, which is why we included both in this test. We explore and analyze this anomaly in a dedicated article.

Packet-per-second limits imposed by EC2 can become an issue with efficient webservers like FastHTTP.

In our test, we modeled the backend database interaction as a variable “sleep” delay. In reality, such interaction consumes additional packet-per-second budget in proportion to the workload. Since the front-end traffic already consumes over 60% of the budget, adding just one database interaction for every front-end request will result in oversaturation.
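The budget claim can be checked with a short Go sketch. It assumes the 60% figure refers to FastHTTP's peak of roughly 210k responses per second, and it assumes, purely for illustration, that one database round trip costs the same 4 packets as an HTTP transaction. Neither assumption is stated in the test itself, and the names `frontendPackets` and `packetsPerDBCall` are hypothetical.

```go
package main

import "fmt"

const (
	ppsLimit        = 1250000.0 // packet-per-second limit cited for m5.2xlarge
	packetsPerTrans = 4.0       // packets per HTTP request/response pair
	packetsPerConn  = 6.0       // packets to establish and close a connection
	requestsPerConn = 100.0     // requests sent over each connection

	// ASSUMPTION: a database round trip costs as many packets as an HTTP
	// transaction. The real cost depends on the database protocol.
	packetsPerDBCall = 4.0
)

// frontendPackets returns the packets/sec generated by front-end traffic
// at the given response rate.
func frontendPackets(responsesPerSec float64) float64 {
	return responsesPerSec*packetsPerTrans +
		responsesPerSec/requestsPerConn*packetsPerConn
}

func main() {
	const peak = 210000.0 // approximate FastHTTP peak, responses per second
	front := frontendPackets(peak)
	withDB := front + peak*packetsPerDBCall
	fmt.Printf("front-end only: %.1f%% of budget\n", 100*front/ppsLimit)
	fmt.Printf("with one DB call per request: %.1f%% of budget\n", 100*withDB/ppsLimit)
}
```

Under these assumptions, front-end traffic alone comes to about 853k packets per second, or roughly 68% of the limit, and one database call per request pushes the total well past 100%.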