This article is a follow-up to part 1, in which we surveyed Cowboy webserver performance. There, to our surprise, we observed that the 2.x versions were less performant than 1.x.

In this article, we analyze the root cause of the performance degradation in Cowboy 2. We also point to some solutions and show their effect on the test results.

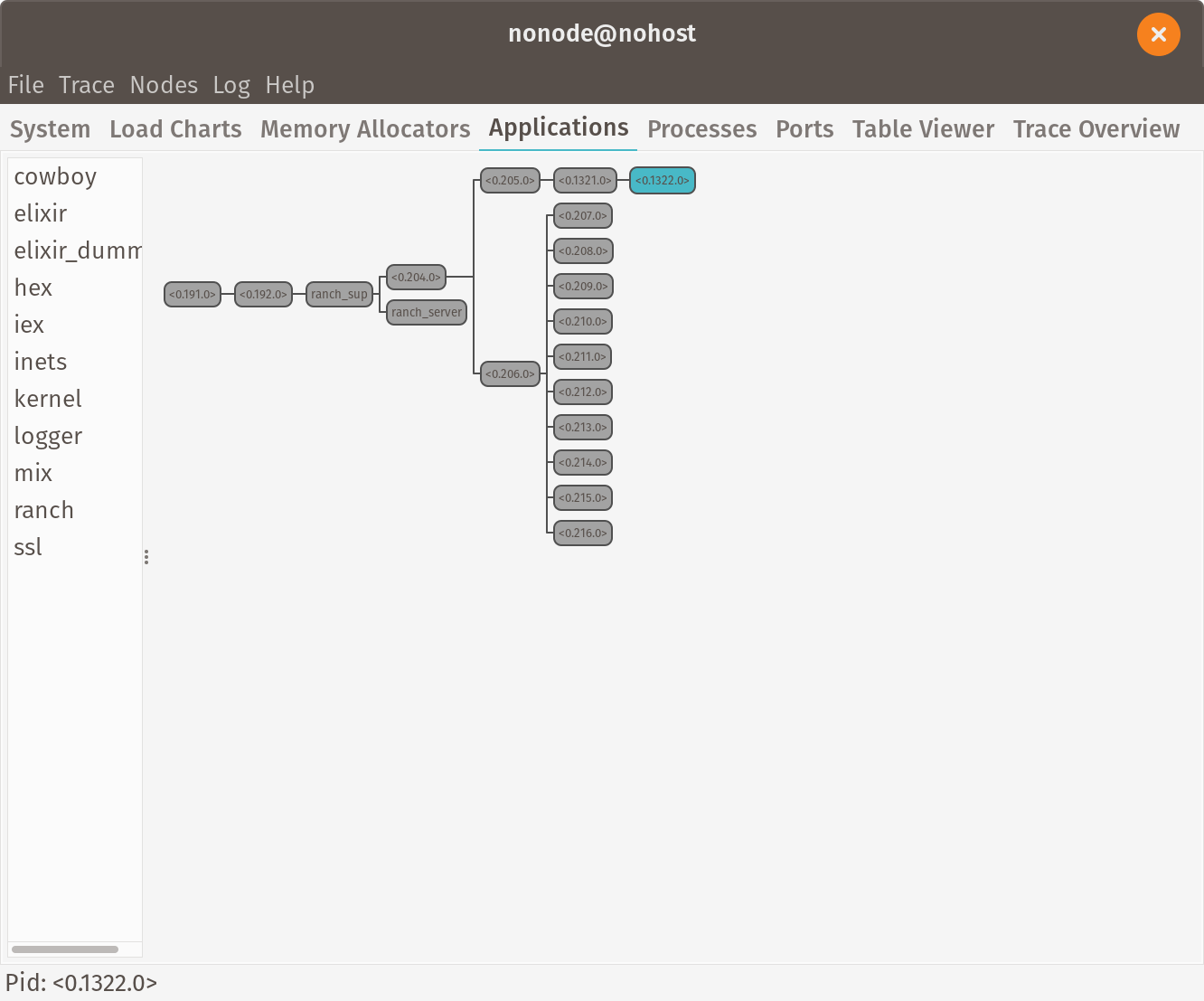

We decided to start the analysis by looking at the Erlang VM supervision tree for our “dummy” web application. To see the supervision tree reflecting the request while it is being handled, we made the handler sleep for several minutes and started the request with curl.

With Cowboy 1, we quickly identified the Ranch connection supervisor (PID 0.200.0) and the connection process that is serving our request (PID 0.364.0), which is running Elixir’s Process.sleep.

Next, we did the same experiment with Cowboy 2.

Here we see the Ranch connection supervisor (PID 0.205.0), the connection process (PID 0.1321.0), and another process (PID 0.1322.0), spawned by the connection process, which is the one running Process.sleep. This additional process is likely to introduce overhead, since the request and the response must now be passed between processes. Why is Cowboy 2 doing this?
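To get a feel for the cost of that extra hop, the two arrangements can be modelled in plain Elixir. This is a minimal sketch, not Cowboy code: `HopDemo`, `handle/1` and `bench/2` are hypothetical names, and the "request" is just a string. It only compares calling a handler inline with handing it to a freshly spawned process and receiving the result as a message.

```elixir
defmodule HopDemo do
  # Hypothetical stand-in for an application handler: request in, response out.
  def handle(request), do: {200, "echo: " <> request}

  # Cowboy 1 style: the connection process calls the handler itself.
  def inline(request), do: handle(request)

  # Cowboy 2 style (simplified): the connection process spawns a separate
  # process for the request and gets the response back as a message.
  def via_process(request) do
    parent = self()
    ref = make_ref()
    spawn_link(fn -> send(parent, {ref, handle(request)}) end)

    receive do
      {^ref, response} -> response
    after
      5_000 -> raise "no response from request process"
    end
  end

  # Average microseconds per call over `n` iterations.
  def bench(fun, n) do
    {micros, _results} = :timer.tc(fn -> for _ <- 1..n, do: fun.("ping") end)
    micros / n
  end
end

IO.inspect(HopDemo.bench(&HopDemo.inline/1, 10_000), label: "inline us/req")
IO.inspect(HopDemo.bench(&HopDemo.via_process/1, 10_000), label: "via process us/req")
```

Both paths return the same response; only the time per request differs. The absolute numbers depend on the machine and say nothing about Cowboy itself, which does considerably more work per request.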

The primary goal of the Cowboy 2 redesign was to introduce support for HTTP 2.0. With HTTP 2.0, not only is it possible to handle multiple request/response interactions over a single TCP connection, but it is also possible to overlap such interactions. In other words, the client may submit further requests without waiting for earlier responses. HTTP 2.0 achieves this by defining the concept of a stream. Multiple streams can be multiplexed over a single TCP connection. Within each stream, the request is followed by its response, which makes a stream the logical equivalent of a single HTTP 1.1 request/response exchange.

Cowboy 1 defines a straightforward interface for the programmer to write application-level HTTP handlers. Ranch is responsible for creating a process to handle each TCP connection. This process is then responsible for receiving and parsing the request, calling the application handler, and building and writing the response. The application handler is expressed as a single function that, in essence, gets the request as a parameter and returns the response. Since nothing else can happen on the TCP connection during this call, it can be done synchronously.

With HTTP 2.0 and Cowboy 2, this simple design does not work anymore. The process responsible for handling the TCP connection cannot block while waiting for the application handler to respond, since it must be able to process other requests and responses concurrently.

There are two possible ways of solving this. One is to change the programmer’s interface and require asynchronous handling. Another is to introduce one more process that is dedicated to handling stream interactions so that we keep the interface simple and mostly compatible with Cowboy 1.

Cowboy 2 does both. Out of the box, it keeps the handler interface simple at the cost of introducing the stream process. It also lets the programmer write a custom stream handler that replaces the default one, taking on all of the complexity of handling multiple streams asynchronously.
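The default arrangement, one process per stream with the connection process left free to receive, can be modelled in a few lines of plain Elixir. This is a sketch under simplifying assumptions, not Cowboy's implementation: `MultiplexDemo`, `handle/1` and `serve/1` are hypothetical names, and a "request" is just a stream id paired with an artificial delay.

```elixir
defmodule MultiplexDemo do
  # Hypothetical handler: blocks for `delay_ms`, then answers.
  def handle({stream_id, delay_ms}) do
    Process.sleep(delay_ms)
    {stream_id, "done after #{delay_ms} ms"}
  end

  # Models the connection process: it starts one process per stream and then
  # collects the responses in whatever order the handlers finish.
  def serve(requests) do
    conn = self()
    ref = make_ref()

    for request <- requests do
      spawn_link(fn -> send(conn, {ref, handle(request)}) end)
    end

    for _ <- requests do
      receive do
        {^ref, {stream_id, _body}} -> stream_id
      after
        5_000 -> raise "stream process did not respond"
      end
    end
  end
end

# Stream 1 is slow and stream 3 is fast, so stream 3 completes first.
IO.inspect(MultiplexDemo.serve([{1, 200}, {3, 10}]))
```

Because each blocking handler runs in its own process, a slow stream does not delay a fast one. Had the connection process called `handle/1` directly, stream 3 would have waited for stream 1 to finish.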

The downside is that HTTP 1.x and HTTP 2.0 share the same design, and therefore, we incur the overhead even if we only need HTTP 1.x.

“Fast” stream handler

To validate our performance theory, we implemented a version of our “dummy” web application that replaces the default Cowboy stream handler with a “fast” stream handler that processes the request directly inside the TCP connection process, just like Cowboy 1 does. (Note that this “fast” stream implementation is a hack and should not be used beyond experimentation.) This approach works nicely for HTTP 1.x servers but may affect the scalability of multiple HTTP 2.0 streams if the handler is blocking.
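The general shape of such a handler might look like the sketch below. It is illustrative only and is not the handler used for the measurements. The callback names and the `{:response, ...}` and `:stop` commands follow Cowboy 2's `cowboy_stream` behaviour as we understand it; `FastStreamSketch` and `handle/1` are hypothetical, and the request is reduced to a map with a `:path` key.

```elixir
defmodule FastStreamSketch do
  # In a real project this module would declare `@behaviour :cowboy_stream`;
  # it is left out here so the sketch compiles without Cowboy installed.

  # Hypothetical application handler: request map in, response tuple out.
  def handle(%{path: path}) do
    {200, %{"content-type" => "text/plain"}, "Hello from " <> path}
  end

  # Runs in the connection process once the request headers have arrived.
  # The handler is called right here instead of in a spawned request process.
  def init(_stream_id, req, _opts) do
    {status, headers, body} = handle(req)
    {[{:response, status, headers, body}, :stop], :no_state}
  end

  # This sketch ignores request bodies and any messages sent to the stream.
  def data(_stream_id, _is_fin, _data, state), do: {[], state}
  def info(_stream_id, _info, state), do: {[], state}
  def terminate(_stream_id, _reason, _state), do: :ok
  def early_error(_stream_id, _reason, _partial_req, resp, _opts), do: resp
end

IO.inspect(FastStreamSketch.init(1, %{path: "/"}, %{}))
```

With Cowboy installed, a module like this would be listed under the `stream_handlers` key of the protocol options in place of the default `cowboy_stream_h`. Any blocking work inside `handle/1` stalls the whole connection, which is exactly the trade-off described above.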

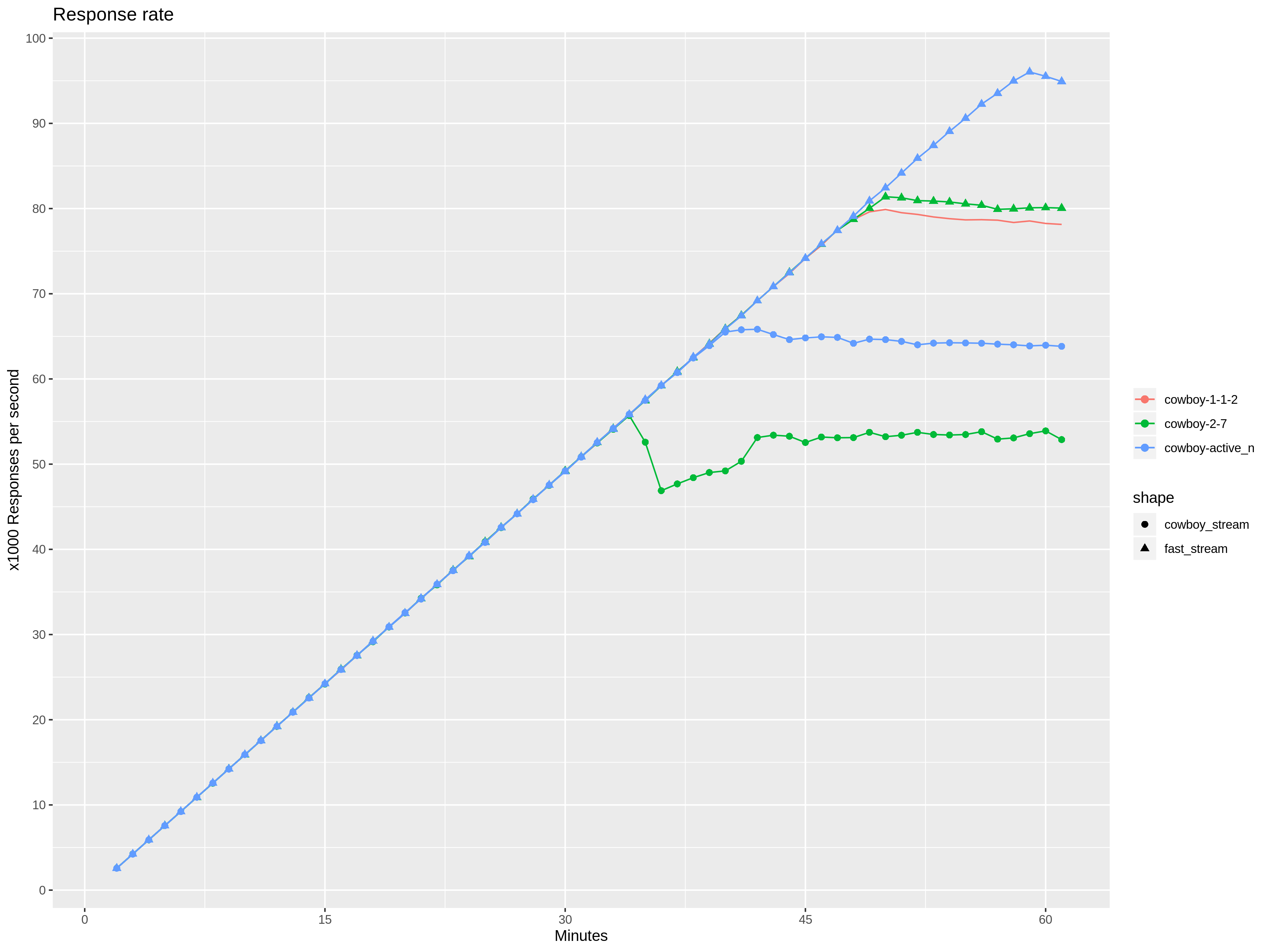

The test results confirm our theory and show that Cowboy 2.7 performance is comparable to Cowboy 1.1.2 when using the same process architecture! Both reached 80k responses per second, with Cowboy 2.7 being slightly faster.

{active, N}

Another potential optimization is the {active, N} TCP/TLS socket option. (Note that for TLS this option has only been available since OTP 21.3.) In OTP, active mode means that the process attached to a socket receives data as messages instead of proactively calling the recv function. Cowboy traditionally uses {active, once}, which delivers a single message and then resets the socket back to passive mode.

This pull request uses {active, N} instead, which delivers N messages before resetting to passive mode, with N currently set to 100. This change reduces the number of calls to the OTP port driver, at the cost of giving up fine-grained flow control.
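The difference between the two modes can be seen with nothing more than `:gen_tcp` on a loopback connection. The sketch below is a toy, not the change from the pull request: `ActiveModeDemo` and `run/2` are hypothetical names, N is set to 3 rather than 100 to keep the numbers small, and `packet: 4` framing is assumed so that each send arrives as exactly one message. It counts how often the receiver has to call `:inet.setopts/2` to keep data flowing.

```elixir
defmodule ActiveModeDemo do
  # Receives `total` length-prefixed packets over a loopback connection using
  # the given active mode (:once or a positive integer N).
  # Returns {packets_received, setopts_calls}.
  def run(mode, total) do
    {:ok, listen} =
      :gen_tcp.listen(0, [:binary, packet: 4, active: false, ip: {127, 0, 0, 1}])

    {:ok, port} = :inet.port(listen)
    {:ok, client} = :gen_tcp.connect({127, 0, 0, 1}, port, [:binary, packet: 4, active: false])
    {:ok, server} = :gen_tcp.accept(listen)

    for i <- 1..total, do: :ok = :gen_tcp.send(client, "request #{i}")

    # Arm the socket for the first time; this is setopts call number one.
    :ok = :inet.setopts(server, active: mode)
    result = loop(server, mode, total, 0, 1)

    Enum.each([server, client, listen], &:gen_tcp.close/1)
    result
  end

  # All packets received: report the totals.
  defp loop(_socket, _mode, total, total, calls), do: {total, calls}

  defp loop(socket, mode, total, received, calls) do
    receive do
      {:tcp, ^socket, _data} when mode == :once and received + 1 < total ->
        # {active, once}: passive again after every message, so re-arm each time.
        :ok = :inet.setopts(socket, active: :once)
        loop(socket, mode, total, received + 1, calls + 1)

      {:tcp, ^socket, _data} ->
        loop(socket, mode, total, received + 1, calls)

      {:tcp_passive, ^socket} ->
        # {active, N}: sent once N messages have been delivered; re-arm for N more.
        :ok = :inet.setopts(socket, active: mode)
        loop(socket, mode, total, received, calls + 1)
    after
      5_000 -> raise "timed out waiting for data"
    end
  end
end

IO.inspect(ActiveModeDemo.run(:once, 6), label: "{active, once}")
IO.inspect(ActiveModeDemo.run(3, 6), label: "{active, 3}")
```

For the same six packets, the {active, N} run needs fewer `setopts` calls than the {active, once} run. The price is that up to N messages can land in the process mailbox before the receiver gets a chance to apply back-pressure.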

The test results show further improvement. The “fast” stream handler combined with the {active, N} change in Cowboy 2.7 reaches 95k responses per second. The {active, N} change alone improves throughput from 53k to 65k responses per second.

Conclusion

The main finding is that the HTTP 2.0-centric design of Cowboy 2 adds significant overhead even when used with HTTP 1.1. There is a way to work around this by implementing a custom stream handler that processes requests directly in the TCP connection process without spawning a dedicated stream process. With this approach, Cowboy 2.7 performance is comparable to Cowboy 1.1.2. When this approach is combined with the {active, N} socket option, Cowboy 2.7 achieves a significant performance improvement over Cowboy 1.1.2 (95k versus 80k responses per second in our tests).